Chapter 4 Measurement Properties of the CDI

Many researchers are initially shocked to hear that one of the most important methods for studying child language is parent report. Aren’t parents extremely biased observers? Yet, as we argued in Chapter 2, alternative methods like naturalistic observation or lab experiments can also be biased, and are quite costly to revisit at scale. Thus, the goal of this chapter is to revisit the strengths and weaknesses of parent report in depth, since the remainder of our book depends on the use of CDI data.

Our goal is to assess the psychometric utility of the CDI. Many studies provide evidence for reliability in the form of concurrent and longitudinal correlations between CDI scores and for validity in the form of correlations between the CDI and other language measures; some of the most prominent of these studies are cited below and a number of others others are reviewed in Fenson et al. (2007). We also address some issues that have received a little less attention, however. In the first part of the chapter, we discuss the limitations of the CDI (and the design features that address these limitations); in the second part, we use longitudinal data to examine the test-retest reliability of the CDI; and in the third part, we present evidence for the measurement properties of the CDI (including comprehension questions) from a psychometric perspective.

4.1 Strengths and limitations of parent report

Although the standardization of parent report using the CDI contributes to the availability of large amounts of data in a comparable format, there are significant limitations to the parent report methodology that are important to understand (Tomasello and Mervis 1994; Feldman et al. 2000). To begin to do so, it is useful to reflect on what it means when a parent reports that their child “understands” or “understands and says” a word.

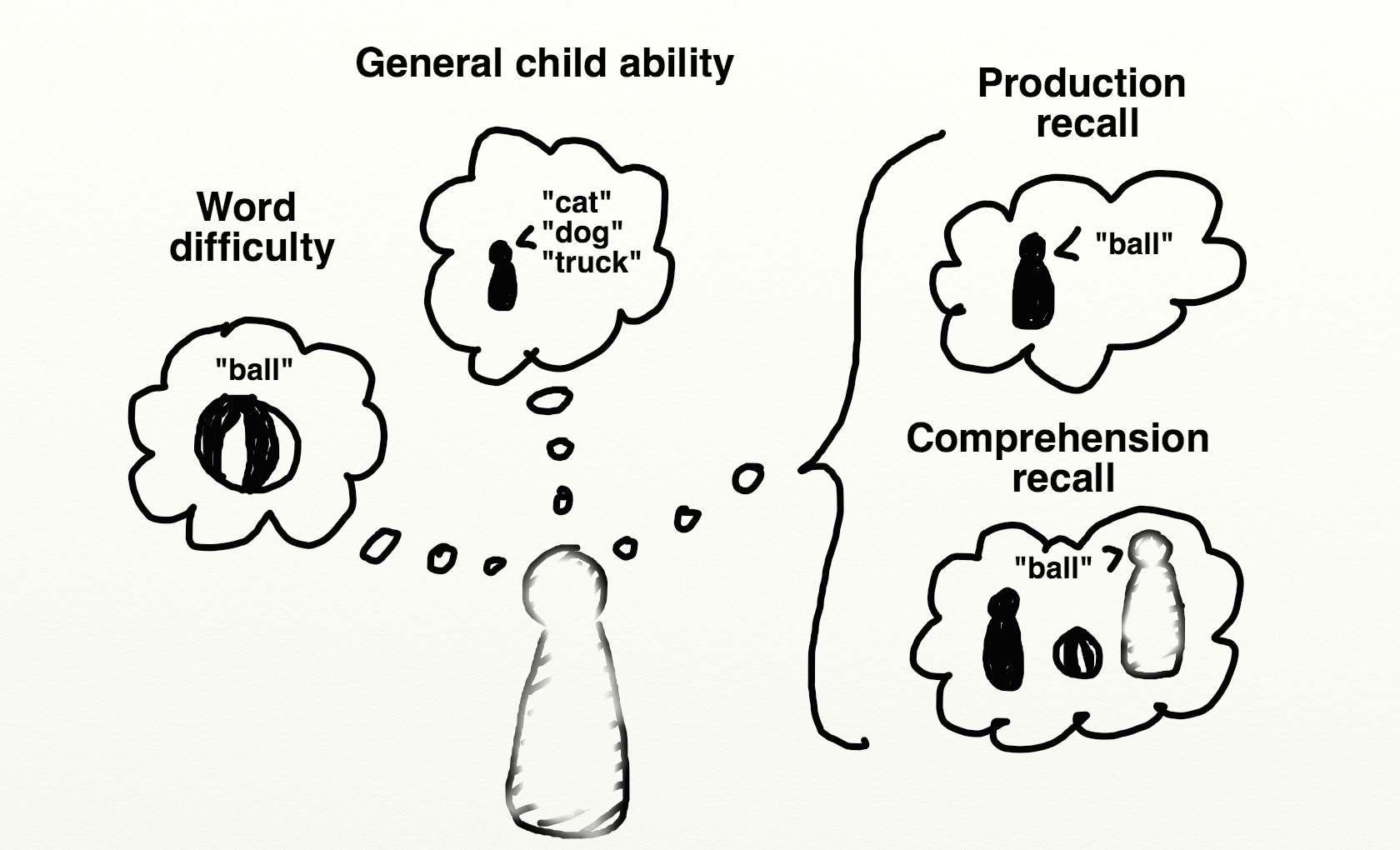

Figure 4.1: The intuitive structure of parent report.

In an ideal world, the parent’s responses would be an unbiased reflection of their observations of their child’s language development. But parent reports are almost certainly less transparent. Figure 4.1 shows a caricature of the process of parent report. A particular report could depend on direct recall of a particular case when their child actually produced or showed comprehension of the word. For example, when asked if their child produces the word ball, a parent is likely recalling situations in which their child has used the word ball correctly, and then reporting on the success or failure of this process of recollection. Of course, this judgment clearly depends on the parent’s ability to accurately judge that the child intended to say the word ball, that the child’s target word form was ball, and that the child has some meaning for the word form ball that at least approximates the expected meaning. But, in addition to these factors, parents probably draw on their general assessment of the difficulty of the word and on their overall assessment of the child’s linguistic abilities. As even this simple sketch shows, parent report judgments are based on a fairly complex set of factors. Hence, there are legitimate concerns about the ability of parents to provide detailed and specific knowledge about their children’s language. We discuss specific concerns below.

First, parents are imperfect observers. Most parents do not have specialized training in language development, and may not be sensitive to subtle aspects of language structure and use. Further, a natural pride in the child and a failure to critically test their impressions may cause parents to overestimate the child’s ability; conversely, frustration in the case of delayed language may lead to underestimates. Parent report is most likely to be accurate under three general conditions: (1) when assessment is limited to current behaviors, (2) when assessment is focused on emergent behaviors, and (3) when a recognition format is used. Each of these conditions acts to reduce demands on the respondent’s memory. In addition, parents are likely to be better able to report on their child’s language at the present time than at times past and better able to report specific items when their child is actively learning those particular items (e.g., reporting on names for animals after a trip to the zoo).

Following (3), one particular key design principle of the CDI is that parents are better able to choose from a list of items that are likely candidates (recognition), rather than requiring that the parents generate the list themselves (recall). Although this second type of assessment sounds implausibly bad, it is surprising how often it is still used (or, even worse, asking the global question “Does your child know at least 50 words?” that is so commonly used in pediatric assessments).

CDI forms are also designed around commonsense age limitations on parent report. In typically-developing samples, the assumption is that parents can track their child’s comprehension vocabulary to about 16–18 months, after which the number of words a child can understand is thought to be too large to monitor. For productive vocabulary, the assumption is that specific word productions can be monitored until about 2.5–3 years, after which the number of words a child can say becomes too large. Different instrument developers make different choices about the ceiling of CDI-type forms but relatively few have considered CDI-type parent report for measuring older children’s vocabularies (but cf. Libertus et al. 2015).

Second, parent reports likely suffer from a number of biases that interact with sub-portions of the forms and the ages of the target children. For example, it is likely that parents may have more difficulty reporting on children’s comprehension or production of function words (e.g., so, then, if), perhaps because these words are more abstract and less referential, than content words (e.g., baby, house). Estimates for function words may then rely more on parent estimates of the words’ general difficulty, rather than actual observations. We return to this question below in our psychometric analyses.

In addition, asking parents to reflect on their child’s language abilities may be particularly difficult for early vocabulary and especially for early comprehension. As Tomasello and Mervis (1994) point out, for the youngest children, especially 8–10 month olds, vocabulary comprehension scores can be surprisingly high, possibly reflecting a lack of clarity in what the term “understands” means for parents of children at this young age (cf. Chapter 3, subsection on “difficult data”). On the other hand, more recent evidence has suggested that children in this age range do plausibly have some comprehension skill even if it is somewhat fragmentary (Tincoff and Jusczyk 1999, 2012; Bergelson and Swingley 2012, 2013, 2015). Thus, the degree to which very early comprehension reports are artifactual – or were actually ahead of the research literature – is unknown. (Resolving this question will require detailed studies of the correspondence between parent reports and experimental data for individual children). Below we assess some of the measurement properties of comprehension items, but we are unable to resolve the issue fully.

One study that bears on the earliest production data is Schneider, Yurovsky, and Frank (2015), who compiled a number of sources of data on children’s first words. Surprisingly, that study found relatively few differences for the age and topic distribution of this very salient milestone across datasets collected via a number of different methods, including concurrent (CDI) and retrospective report. The age at which a first word was reported was also relatively similar between CDI data and the concurrent diary reports of a sample of psycholinguists (though some CDI data appeared to be shifted a little bit earlier such that more parents were reporting first words in the 7–9 month period). Thus, there was convergence across different reporting methods in parents’ report on first word production. Parent report could be flawed here, but the specific CDI format may not be to blame.

Third, there is some evidence that variability in reporting biases may be moderated by factors such as SES (Feldman et al. 2000, 2005; L. Fenson, Bates, et al. 2000). Some studies suggest that parents from some SES groups may be more likely to underestimate child’s abilities (Roberts, Burchinal, and Durham 1999), while others report that parents from lower-SES groups may over-estimate children’s abilities, especially comprehension at younger ages (Goldfield and Reznick 1990; Feldman et al. 2000). Later studies, however, have shown that for children over 2 years patterns of validity were consistent in lower and higher-SES groups (Feldman et al. 2005; Reese and Read 2000). Thus, SES-differences could reflect valid delays in children’s language development that parallel those obtained with different methods, such as naturalistic observation or standardized tests (e.g., Hammer, Farkas, and Maczuga 2010).

Fourth, as discussed in Chapter 2, the items on the original CDI instruments were chosen to be a representative sample of vocabulary for the appropriate age and language (Fenson et al. 1994). The checklists contain some words that most, including the youngest, children are able to understand or produce, some words that are understood or produced by the “average” child, and some which only children who are relatively more advanced will understand or produce. This structure ensures that the list has the psychometric property of capturing individual differences in vocabulary both across younger and older children and across children of different developmental levels. Validity of the CDIs has been demonstrated in reference to both standardized tests and naturalistic language sampling (see Chapter 4 of Fenson et al. 2007).

The checklists were not originally constructed with the assumption that responses on individual items would be reliable and valid, however. (Indeed, as we show below, not all words have ideal psychometric properties – e.g., “mittens”). While item-level responses provide useful information about patterns of words that children are likely to understand or produce, responses on the vocabulary checklist do not necessarily license the conclusion that a child would respond appropriately when asked “can you say ______?” by an experimenter in a confrontation naming task. Nonetheless, if parents’ observations at the item level reflect any signal – even in the context of significant influence from other factors – then this signal should be observable by aggregating together data from many children. Thus, the item-level analyses we present in Chapter 10 (for example) are not predicated on an assumption of high item-level reliability for individual children.

Fifth, while the lengths of the vocabulary checklists on the CDIs may give the impression that they yield an estimate of the child’s full vocabulary, in fact, the vocabulary size estimates only reflect a child’s relative standing compared to other children assessed with the same list of words. Such estimates should not be misconstrued as a comprehensive estimate of the child’s vocabulary knowledge, as CDI scores likely understate the size of a child’s “true” vocabulary substantially, especially for older children.

Sixth, when a parent reports on a word on the vocabulary checklist, there is no information about the actual form of the word used, and hence, these vocabulary estimates can say little about phonological development (e.g. segmental vs. suprasegmental approaches to the analysis of speech). Parents are instructed that they should check that a child can produce a word even if it is pronounced in the child’s “special way,” and only approximates the adult form. Thus, throughout this book we refrain from analyzing the phonological forms of words reported on CDI instruments (with the exception of Chapter 10, in which we use word length in the correct adult form as a predictor of production).

Finally, we also gain no information from parent report about the frequency with which children use a particular word in their spontaneous speech, nor can we know the range of contexts in which individual lexical items are used (e.g., is that word used productively vs. in a memorized chunk of speech). Thus, the vocabulary size that is captured by the CDIs reflects the number of different word types that the child is able to understand or produce, with little information about nuances in meaning that might be reflected in actual usage.

Despite these limitations, when used appropriately, the CDI instruments yield reliable and valid estimates of total vocabulary size. Because the instruments were designed to minimize bias by targeting current behaviors and asking parents about highly salient features of their child’s abilities, they have proven to be an important tool in the field. Dozens of studies demonstrate concurrent and predictive relations with naturalistic and observational measures, in both typically-developing and at-risk populations (e.g., Dale and Fenson 1996; Thal et al. 1999; Marchman and Martínez-Sussmann 2002). In addition, a variety of recent work has shown that individual item-level responses can yield exciting new insights, for example about the growth patterns of semantic networks when aggregated across children (Hills et al. 2009, 2010). Such analyses have the potential to be even more powerful when applied to larger samples and across languages.

4.2 Longitudinal stability of CDI measurements

A classic test of the reliability of a psychometric instrument is its test-retest correlation. Assessing this correlation for CDIs for a single reporter is a bit impractical however, since – unlike e.g., a math test with objective answers and different question forms – this procedure would involve asking a caregiver to fill out the exact same survey twice in a row, and presumably they would remember many of their answers. An alternative possibility would be to measure the same child via multiple caregivers. This procedure was followed by De Houwer, Bornstein, and Leach (2005), who found that caregivers varied substantially from one another in their responding; but plausibly this is due not only to parent bias but also to the different contexts in which caregivers interact with children (e.g., one caregiver takes the child to the zoo more often, another plays kitchen at home).

Avoiding the issues of these procedures, we instead examine correlations in CDI measurements across developmental time. There are only a small number of deeply longitudinal corpora in Wordbank, so we will limit our investigation to two languages: Norwegian and English. Furthermore, the largest group of longitudinal data cover the WS form so we restrict to these data for simplicity. Within each of these datasets, the modal number of observations is two, but there are some children with more than 10 CDIs available.

In this type of analysis, differences between a particular individual’s measurements could vary for two primary reasons: first, measurement error (parent forgetfulness, mistakes, etc.) and second, true developmental change (learning new words). Since vocabulary typically increases over time, we can look at the relative magnitudes of CDI scores via correlations; this is our first analysis. Our second analysis attempts to normalize these absolute differences by extracting percentile ranks and finds that this procedure in fact increases longitudinal correlations. Because there are two sources of differences between measurements, when correlations are low, we do not have direct evidence for whether 1) children’s relative linguistic abilities are shifting with respect to one another or 2) we are observing measurement error. But, when correlations are high, we can assume the converse: measurement error is low and developmental stability is relatively high. It turns out that this latter situation is the case. As we will discuss in more detail in Chapter 5, there is substantial variability between children in vocabulary size. The current analysis suggests that this variability appears to be quite stable longitudinally.

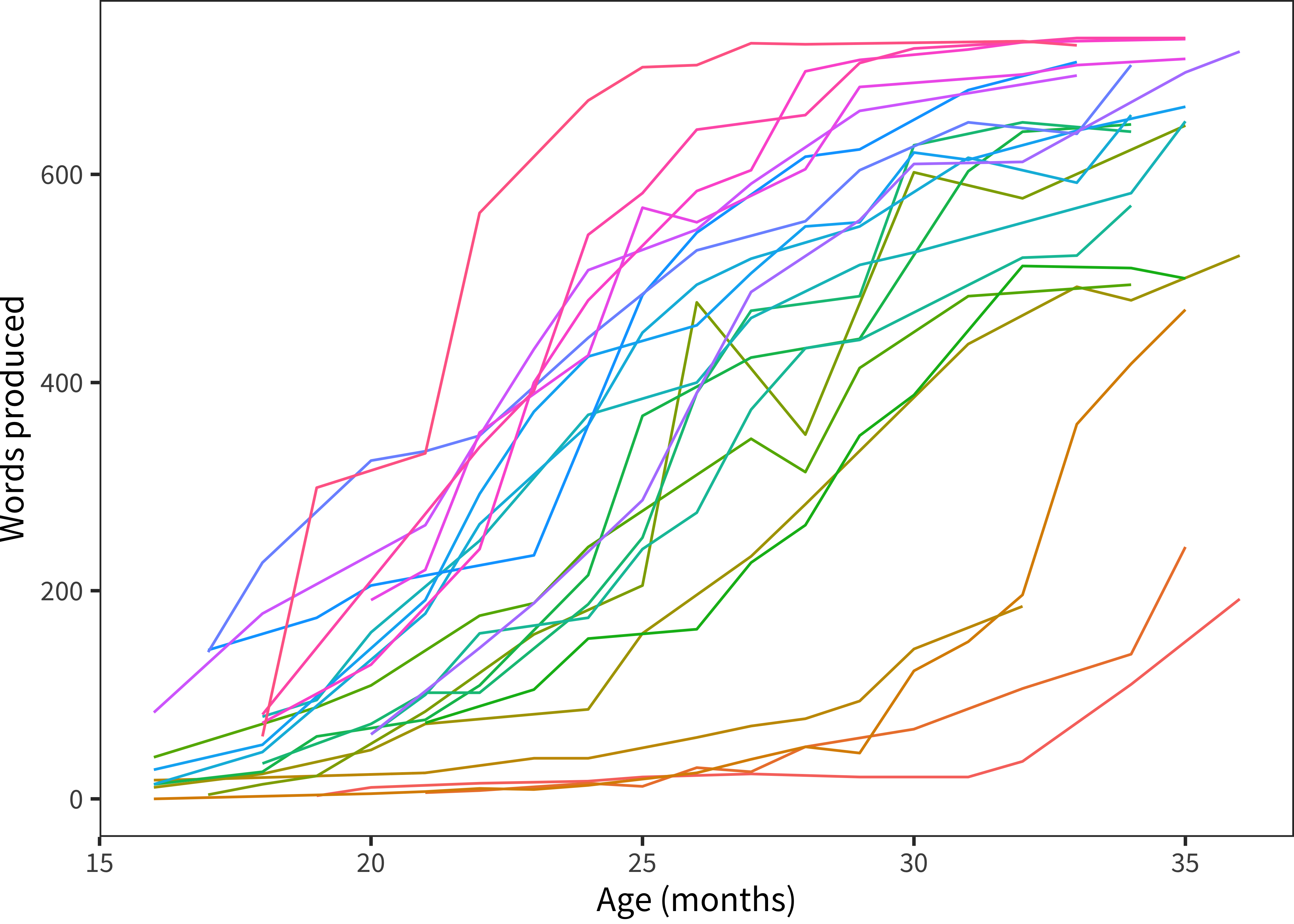

Figure 4.2 shows the trajectories of children (individual colors) who were measured more than ten times; it includes Norwegian data only, due to data sparsity issues in English. These trajectories appear quite stable; the ranking of individuals does not appear to change much over the course of several years. This general conclusion – longitudinal stability of language ability as well as limited measurement error – is ratified by other studies using different datasets, for example Bornstein and Putnick (2012), who found substantial stability (r = 0.84) between latent constructs inferred from early language at 20 months and later language measured at 48 months.

Figure 4.2: Vocabulary size as a function of age for children with more than 10 administrations (color indicates child).

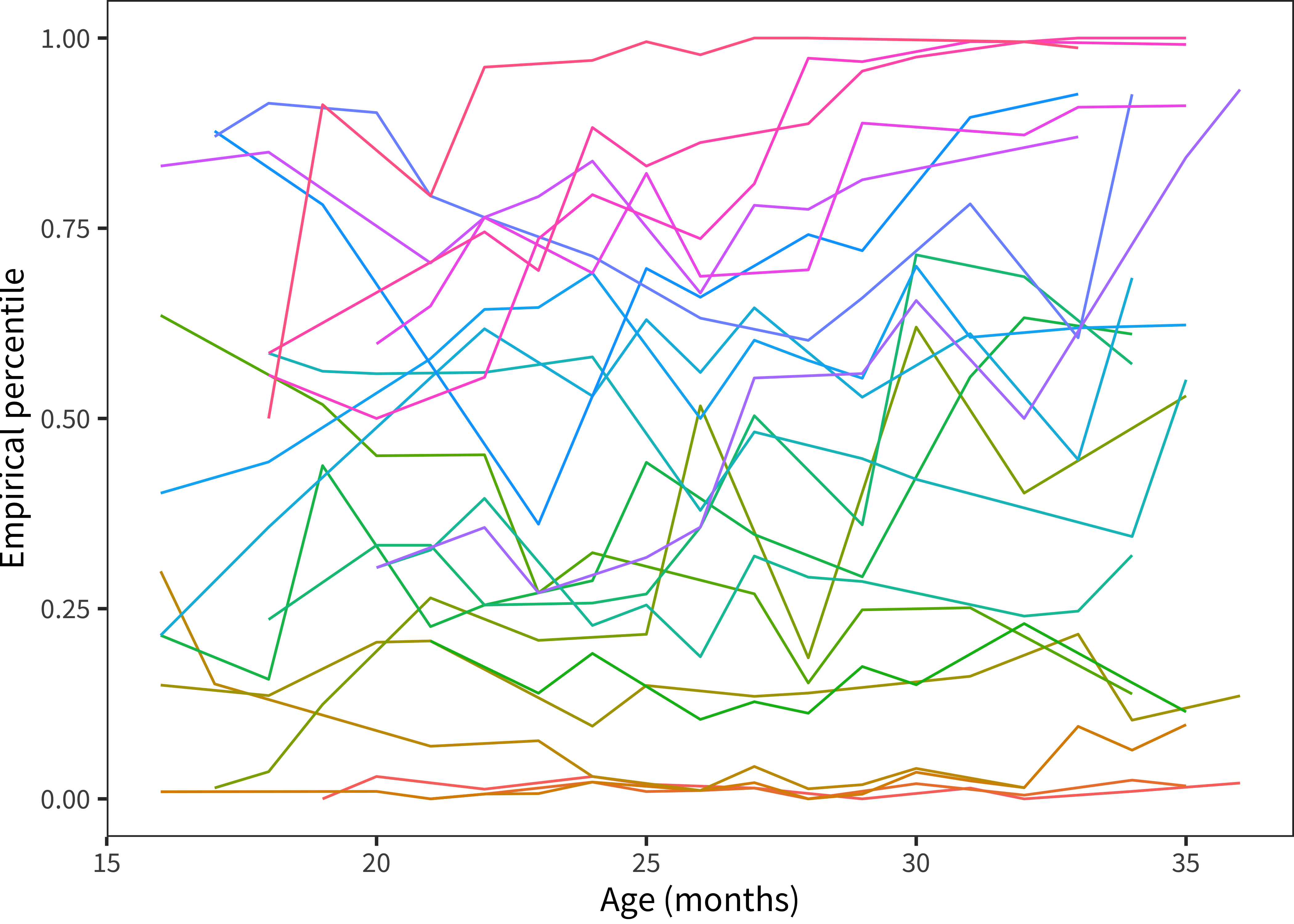

One way to operationalize the question of stability is how children’s percentile ranks tend to change over time. We examine this question qualitatively by showing the longitudinal trajectory of individual children’s empirical percentile ranks based on the full normative sample for that language.10 As shown in Figure 4.3, these ranks are visually quite stable.

Figure 4.3: Vocabulary percentile as a function of age for children with more than 10 administrations (color indicates child).

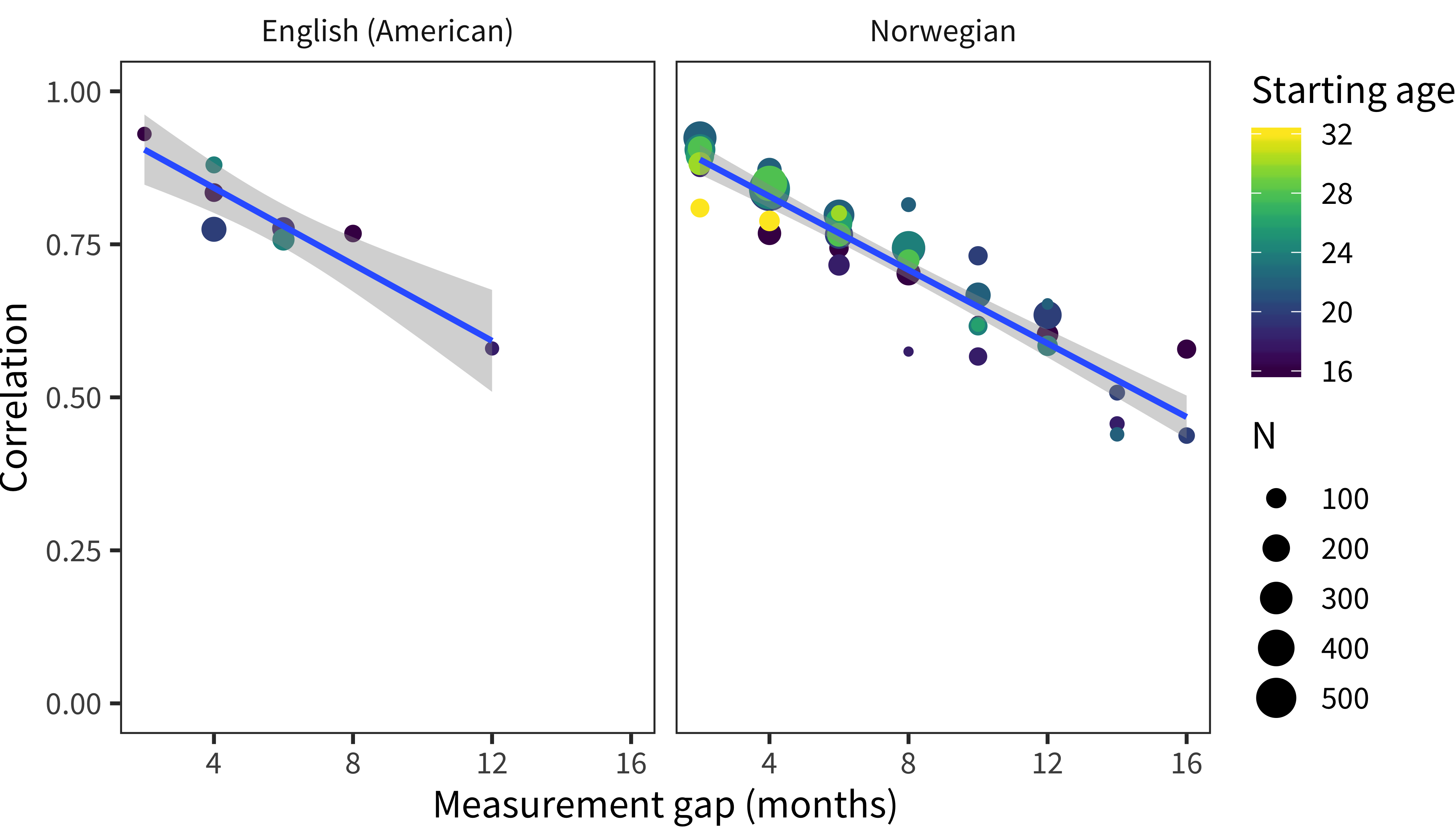

The transformation to percentile ranks allows us to assess the correlation between a child’s percentile rank at time 1 and their rank at time 2, depending on the gap between these two. Because of sparsity, we bin children into two-month age bins and eliminate age bins with fewer than 50 children, then calculate between-bin correlations in percentiles. Figure 4.4 shows this analysis, which reveals that percentile ranks are quite stable. Regardless of the age of the children, across a 2–4 month age gap the two percentiles are correlated at better than 0.8.

Longitudinal stability declines to around 0.5 at a maximal remove of 16 months, but this decline should be taken with a grain of salt. First, a 16 month gap amounts to a doubling of the child’s age, so stability might be expected to be lower. Second, many children who are measured longitudinally across a 16-month gap will be expected to move from the floor of the form to the ceiling, compromising measurement accuracy. To test this last hypothesis, we evaluated the longitudinal stability of correlations using the same analysis as above, but varying whether we used raw scores or percentiles. The percentile method substantially increased correlations.11

Figure 4.4: Correlations between vocabulary percentiles at multiple age points as a function of the age difference between them.

In sum, the variability between children that we observe in the CDI is quite stable longitudinally. It declines over time, but some of this decline may simply be due to the unavoidable limitations of CDI forms with respect to floor and ceiling effects.

4.3 Psychometric modeling

In this next section, we examine the psychometric properties of the CDI through the lens of psychometric models and Item Response Theory (IRT). In brief, IRT provides a set of models for estimating the measurement properties of tests consisting of multiple items. These models assume that individuals vary on some latent trait, and that each item in a test measures this latent trait to some, possibly variable, extent (see Baker 2001 for detailed introduction). IRT models are a useful tool for constructing and evaluating CDI instruments, as they can help to identify items that perform poorly in estimating underlying ability. For example, WEBER et al. (2018) used IRT to identify poorly-performing items in a new CDI instrument for Wolof (a language spoken in Senegal). IRT can also be used in the construction of computer-adaptive tests; this method has recently been applied to the CDI (Makransky et al. 2016; cf. Mayor and Mani 2018).

IRT models vary in their parameterization. In the simplest (Rasch) IRT model, each item has a difficulty parameter that controls how likely a test-taker with a particular ability will be to get a correct answer. In contrast, in a two-parameter model, each item also has a discrimination parameter that controls how much response probabilities vary with varying abilities. Good items will tend to have high discrimination parameters across a range of difficulties so as to identify test-takers at a range of abilities. Three- and four-parameter models add items for estimating lower- and upper-bounds of responding for individual items.

We examine IRT models as a window into the psychometric properties of the CDI. In the first subsection, we explore latent factor scores using the English WS data. In the second subsection, we examine individual items and find generally positive measurement properties, although with some items at ceiling (included via carry-over from the Words & Gestures form). In the third subsection, we look at differences between comprehension and production in the WG form. In the fourth subsection, we look at the properties of the instrument by word category in both WS and WG.

Overall, the conclusions of our analysis are that:

- Latent factor scores may have some advantages relative to raw scores in capturing individuals’ abilities, but for the purposes of the analyses we perform in the main body of the book, they may carry some risks as well; hence, we do not adopt them more generally.

- In general, CDI WS items tend to perform well, but from a purely psychometric perspective there are a number of items that could be removed from the English WS form because their measurement properties are not ideal.

- Comprehension items, in general, tend to have less discrimination than production, suggesting that they are not as clear indicators of children’s underlying abilities.

- Function words tend to have lower discrimination than other items, but the lexical class differences are not huge and do not interact with whether they are measured using production vs. comprehension.

These analyses generally ratify the conclusion that the measurement properties of the CDI are good, even for function words and for comprehension measures. These questions may carry slightly less signal about the specifics of a child’s vocabulary and load more heavily on a parents’ general estimation of the child’s linguistic ability, but they do carry some signal that relates to other responses. Further, when the English CDI departs from good measurement practice it generally does so for completeness (e.g., including mom and dad words because these are important to parents, even though they do not show good measurement properties or are just different in some other way).

4.3.1 Measurement properties of individual WS items

A first question that we can ask using a fitted IRT model is how well individual items relate to children’s overall latent abilities. Practically speaking, in these analyses, we use the mirt package (Chalmers 2012; Chalmers and others 2016) to estimate the parameters of a four-parameter IRT model. As described above, the two-parameter model includes difficulty and discrimination parameters for each item. The four-parameter model supplements the standard two-parameter model with two parameters corresponding to floor and ceiling performance for a particular item. Items with high rates of guessing or universal acceptance across test takers would tend to have abnormal values on these bounds.

We fit Rasch, two-, three-, and four-parameter models to the English WS data and performed a set of model comparisons. On all metrics – AIC, BIC, and direct likelihood comparison – the 2PL model handily outperfomrmed the Rasch model, suggesting that not every item had the same discrimination. Similarly, the 3PL outperformed the 4PL on all metrics, suggesting that adding an upper bound parameter did not increase model fit. On the other hand, the 2PL and 3PL were close in fit, with AIC and log likelihood favoring the 3PL but BIC favoring the 2PL. In the remainder of the analyses below save one, we adopt the 2PL for simplicity. In an exploratory analysis, we examine upper and lower bounds from the 4PL because the estimated upper bounds help us reason about those items that are not yet universally known by the older children in our sample.

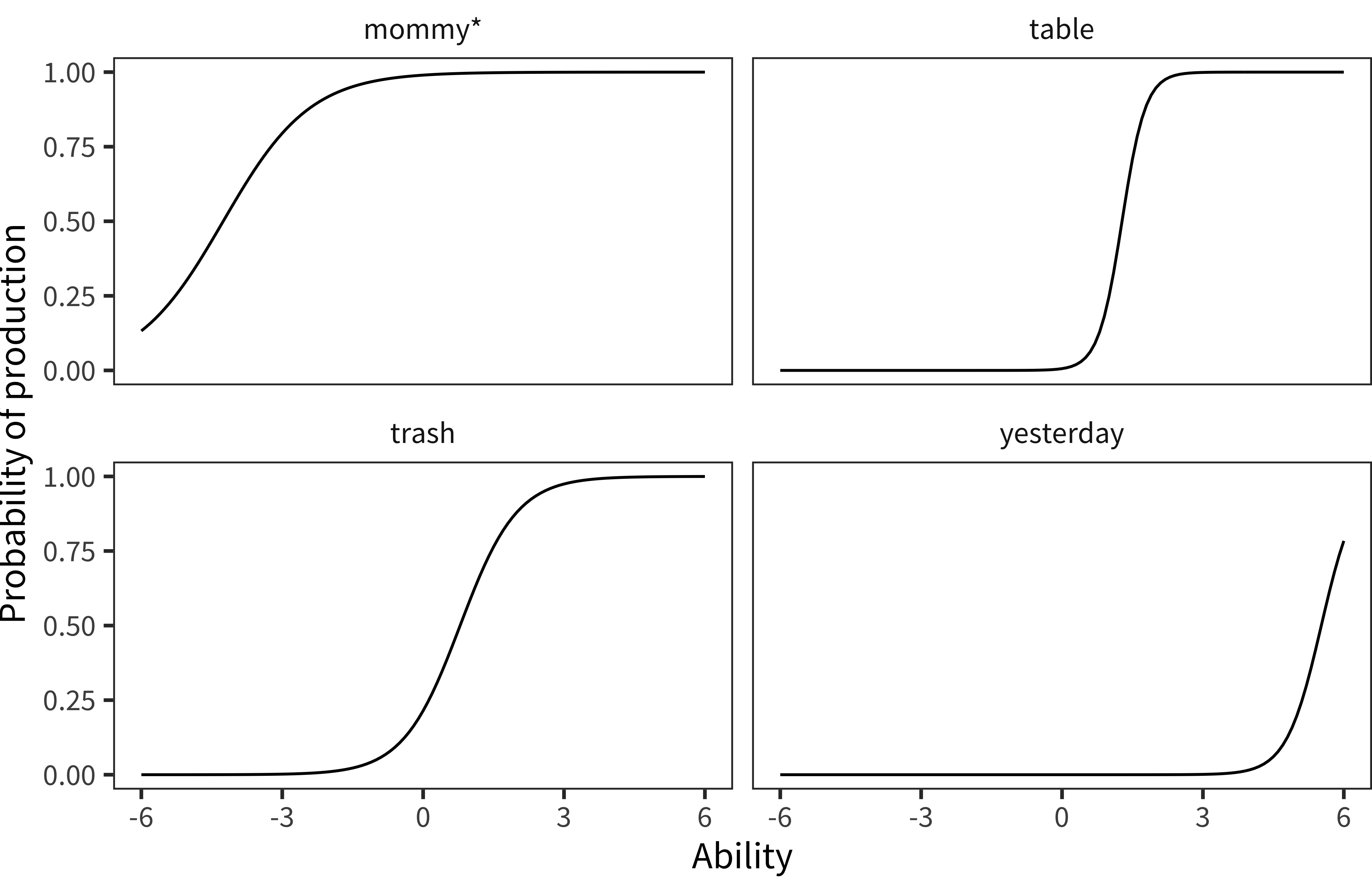

Figure 4.5: Item characteristic curves for a set of individual items from the English WS sample.

We begin by examining some individual item curves from the 2PL fits. Figure 4.5 shows four representative item characteristic curves. Each plots the probability of production by a range of latent ability scores. Mommy is produced by children at all abilities and is relatively uninformative about ability. In contrast, table and trash are both of moderate difficulty, but table is more informative because it has a steeper slope. Finally, yesterday is more difficult overall – in our sample many high vocabulary children still did not produce this word. Generally, items with steeper slopes are considered more diagnostic of ability and hence more desirable.

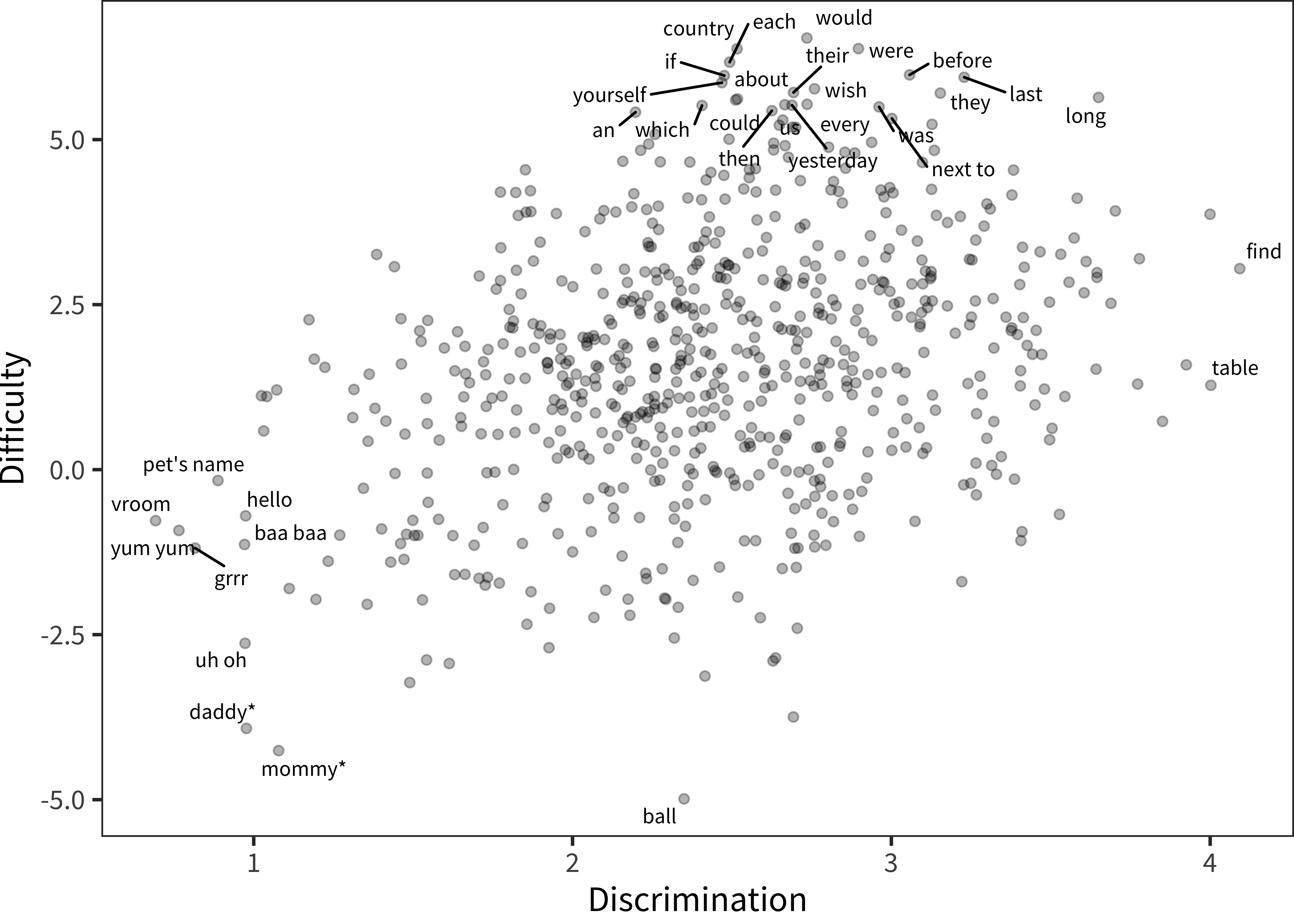

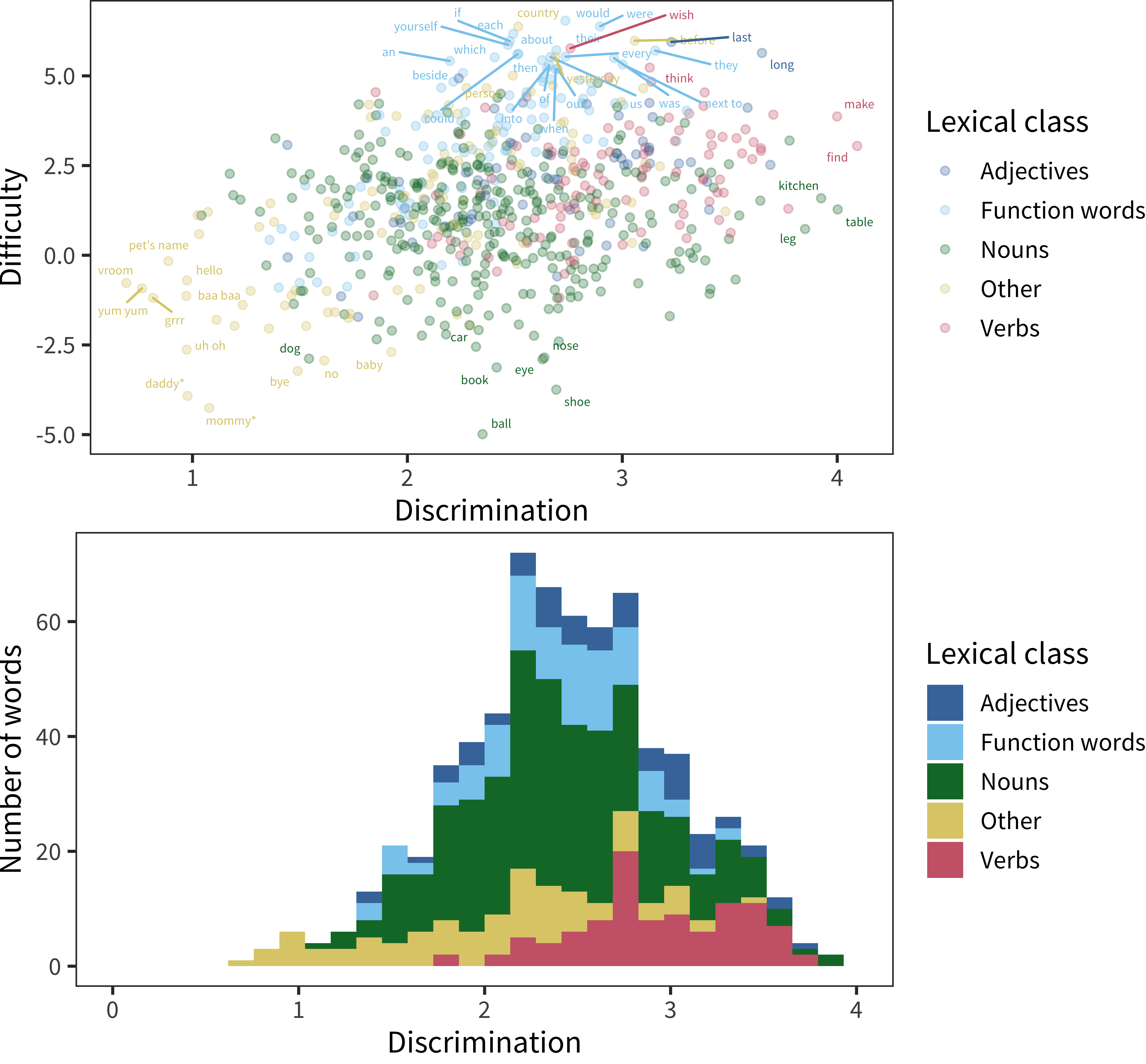

Figure 4.6: Words (points), plotted by their difficulty and discrimination parameters, as recovered by the 2-parameter IRT model (see text). Outliers are labeled.

We now examine these properties across the whole instrument. Figure 4.6 shows item discrimination and difficulty across the full set of items, with outlying items labeled. Difficulty refers to the latent ability necessary for a child to produce an item, on average. Discrimination refers to how well an item discriminates between children of lower and higher ability (as judged by their performance on other items). For example, the word table is spoken by just about half of the children in the sample. Hence, asking whether a child says table is a good way to guess whether they are in the top or bottom half of the distribution.

In contrast, visual inspection shows a tail of items with limited discrimination and low difficulty (e.g., mommy, daddy, uh oh, etc.). These are clearly those items that are produced by nearly all of the children in the sample – they do not discriminate because they are passed by all children in the sample. If the only goal of the instrument were discrimination of different ability levels, they could likely be removed. But, as discussed above, these items tend to be included for completeness. Including these items also helps with compatibility between instruments, since the WS instrument is a strict superset of the WG instrument, which is used with younger children and for which their would presumably be more variability in a word like uh oh. On the upper part of the plot, we also see a large cluster of words that are quite difficult (e.g., country, would, were); these items show some useful discrimination, but presumably only for high ability children.

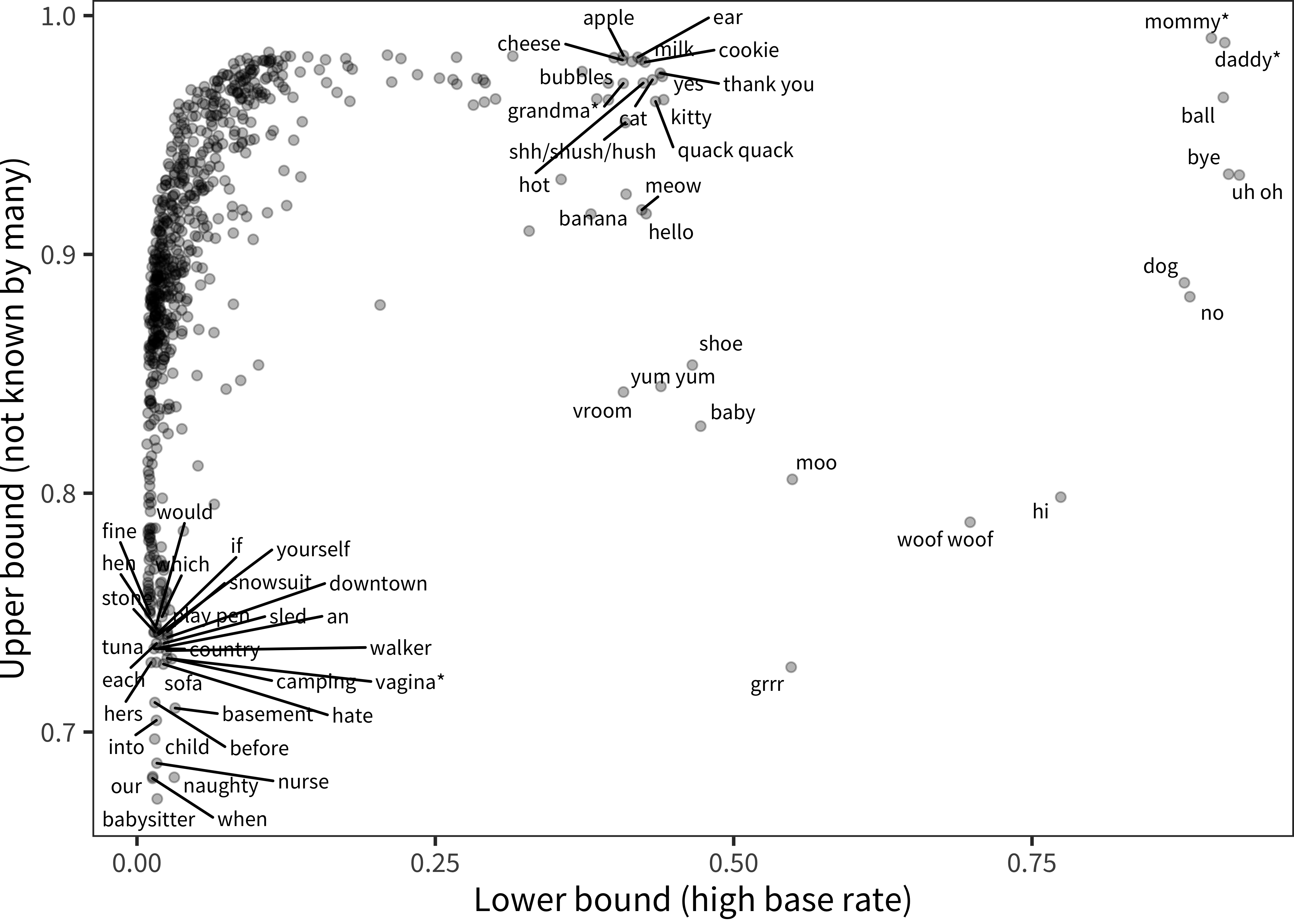

Figure 4.7: Words (points), plotted now by their lower and upper bound parameters from the 4-parameter IRT model.

Turning now to an exploratory analysis using the 4PL model, we examine the recovered upper and lower bounds estimated for particular words, as shown in Figure 4.7. While overall the 4PL model does not improve fit, these parameters are useful because they show the subset of words that are known by only a small number of children (low ceiling) or are known by almost all children (high floor), respectively. Examining those with a very low ceiling, we see items that are likely to be quite idiosyncratic, for a variety of reasons. For example, babysitter, camping, and basement likely vary by children’s home experiences (further mediated by access to resources, parenting practices, and circumstances). In contrast, genital items (e.g. vagina, or the version of this item used in the child’s family) vary by gender (see Chapter 9). Examining those items with a very high floor shows early learned words like mommy. These words are similar to words with very low discrimination patterns. Because these words are known by essentially all children, the four-parameter model may have fit these words as having a high chance level with essentially no discrimination ability.

One way to think about these analyses is that they show that the CDI has not only a large core of words with good measurement properties but also some other words that do not contribute as substantially and add length without adding much signal. If the goal of the CDI were only to provide psychometric estimates of vocabulary size, these would be good candidates for deletion. But because CDIs are also used for other purposes – such as the analyses we present in subsequent chapters – a larger set of items can be useful. We return to this general set of issues in Chapter 18.

4.3.2 Production and comprehension

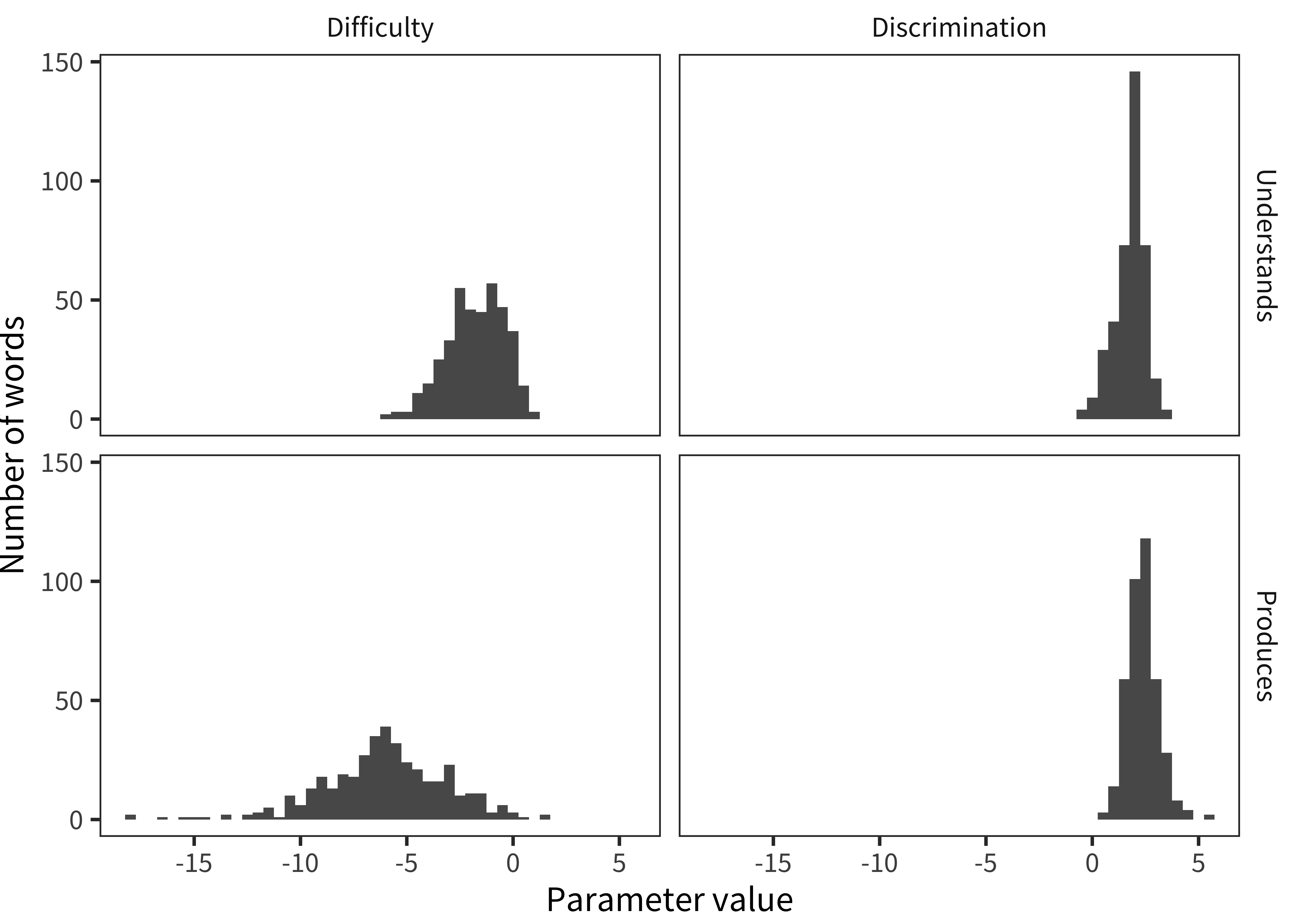

Figure 4.8: Histograms of words’ difficulty and discrimination parameters, for comprehension and production.

We next use IRT to estimate whether there are differences between production and comprehension, using WG data. To do so, we fit 2PL moels to the WG data and examine the distribution of item parameters. In general, a good item distribution will have a range of difficulties, so as to be sensitive to differences between children at a variety of levels. These items also should have relatively high discrimination, so that answers to individual items tend to provide relatively more information.

Figure 4.8 shows discrimination and difficulty parameter value distributions for WG production and comprehension. Difficulty is much higher (negative values) for production relative to comprehension, reflecting the expected asymmetry of production coming “after” (being more difficult than) comprehension. With respect to comprehension, several trends are visible. First, comprehension questions largely have positive discrimination parameters. Thus, these questions on the whole carry signal about children’s latent linguistic ability. There do appear to be more itmes with low or even negative discrimination parameters, however, indicating more items that are not measuring ability appropriately (perhaps because they are difficult for all children or because they are too hard to assess). Mean discrimination is substantially lower for comprehension relative to production (1.8 vs. 2.4).

Overall, this pattern is consistent with the hypothesis that production behavior is a clearer signal of children’s underlying knowledge than assumed comprehension. Why? Perhaps parents are better reporters of production than comprehension, and hence these items are more discriminative of true behavior. The source of error in this case would be parents’ mistaken belief that their child understands a word. Or perhaps comprehension is a fundamentally more variable construct and that, hence, individual word knowledge consistent with understanding could be due to partial knowledge. Here the source of error is variance in how well children know the meanings of words. We cannot distinguish between these two models, but they have different underlying implications for the CDI.

4.3.3 Lexical category effects on item performance

One hypothesis that we have often speculated about is the question of whether there are special psychometric issues with particular word classes. For example, do parents struggle especially to identify whether children produce or understand function words?

Figure 4.9: Lexical class effects on difficulty and discrimination for Words and Sentences. The top plot shows individual words plotted by their parameter values, with color representing the lexical class of the words. The bottom plot shows discrimination information in the form of a histogram.

Using 2PL parameter fits, Figure 4.9 shows WS item difficulty and discrimination (as above) and the histogram of discrimination, but broken down by lexical class (color). Many of the easy, non-discriminating items are found in the “other” section. In contrast, the hardest items tend to be function words. These items tend to have similar discrimination on average (2.4) compared with nouns (2.4), and modestly lower discrimination than adjectives (2.6), and especially verbs (3.0). The situation is not dire: all have a discrimination parameter above one. Thus, although function words are not the most discriminative items on the CDI WS, these items still appear to encode valid signal about children’s abilities.

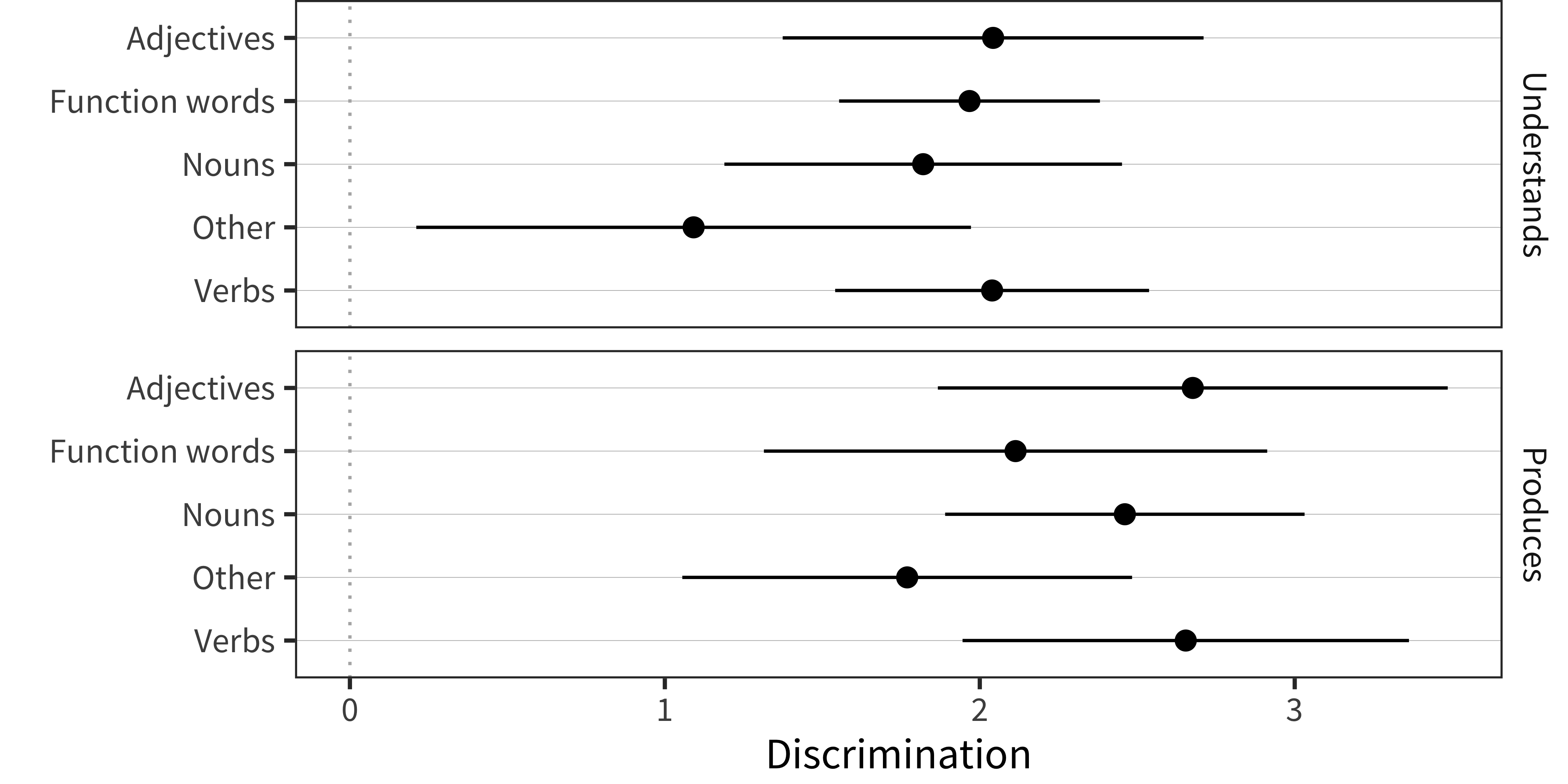

Figure 4.10: Mean discrimination values for individual words’ in production and comprehension measures from the Words and Gestures form (error bars show SD).

In our last analysis, we turn to the WG data. Figure 4.10 shows the mean (error bars show SD) for discrimination parameter values. The only major trend is that there is a moderate level of discrimination for all classes except “other” (which includes items like mommy and daddy and a variety of animal sounds and social routines). One hypothesis about this finding is that, especially early on, parents are very generous in their interpretation of whether their child understands these specific words.

In sum, we do not find evidence that function words are particularly low-performing items from a psychometric perspective – even in comprehension assessments! Rather, there are some low-performing items spread across all categories of the CDI form, and many of these likely perform poorly for the reasons described above – especially difficulty in interpretation of very early behavior and variability in home experience.

4.3.4 IRT models: Conclusions

One question regarding IRT-model derived parameters for individual children is whether they should be used in place of percentiles or raw scores for some of the measurement problems we encounter throughout the rest of the book. Although these latent ability scores might be overall better reflections of children’s vocabulary than other measures, we do not find strong evidence to support that conclusion. For example, in the analysis above, we compared longitudinal correlations derived from raw scores, percentiles, and IRT ability parameters. While IRT parameters yielded higher correlations than raw scores, empirical percentiles performed better still (at least for Norwegian and English, two languages for which we have large amounts of data).

Furthermore, there are other negatives associated with swapping an imperfect but straightforward measure (raw and percentile scores) for a model-derived measure (latent ability). Interpretation clearly suffers if we use the model-derived measure, since readers will not be able to map scores back to actual behavior in terms of the checklist. In addition, model estimation issues across instruments introduce further difficulties in interpretation. Most obviously, model estimates with smaller datasets may vary in unpredictable ways; similarly, a greater presence of poorly-performing items in certain datasets may lead to systematic issues in the latent estimates for those datasets. In the absence of clear solutions to these model-fitting problems, we choose the route of using the “sumscore” (Borsboom 2006), while acknowledging its limitations.

4.4 Conclusions

In this chapter, we examined the measurement properties of the CDI from three perspectives. From a theoretical perspective, we reviewed why the design features of the CDI make it a reasonable tool for measuring child language, even if there are opportunities for error and bias throughout. (Of course, one of these design features is the style of administration for a particular study, so of course a poorly-administered form will yield a dataset with lower reliability and greater bias). Then, we took advantage of the deep longitudinal data available for two languages and showed quite strong longitudinal correlations between CDI administrations. This pattern indicates that early language is a stable construct across development (Bornstein and Putnick 2012). It also signals that measurement error between CDI administrations appears to be limited, at least when the span of time between administrations is not too great. Finally, we used item-response theory to examine the measurement properties of individual items. While the CDI includes some items with limited measurement value (if all that the user cares about is a single ability score), most items show good psychometric properties. This analysis also revealed that comprehension questions and questions about function words do not appear to be particularly worse than other items, contrary to previous speculations. In sum, the CDI appears to be a reliable instrument for measuring children’s early language, with measurement properties that support a range of further analyses.

We could use a model-based method (e.g., the

gcrqmethod used in the Wordbank app and Chapter 5 and 6) but in practice we have enough data in each of these languages that this method should perform well.↩︎We also used latent abilities derived from a 4-parameter IRT model as below. While the IRT-derived ability parameters showed a consistent improvement in longitudinal correlations over the use of raw scores, percentiles realized a further gain over the IRT parameters in this case.↩︎